OpenAI announced on Tuesday that it will begin rolling out Advanced Voice Mode (AVM) to a wider group of ChatGPT’s paying users.

The audio feature, which makes ChatGPT more conversational, is rolling out first to users on ChatGPT’s Plus and Team tiers. Enterprise and Edu customers will start getting access next week.

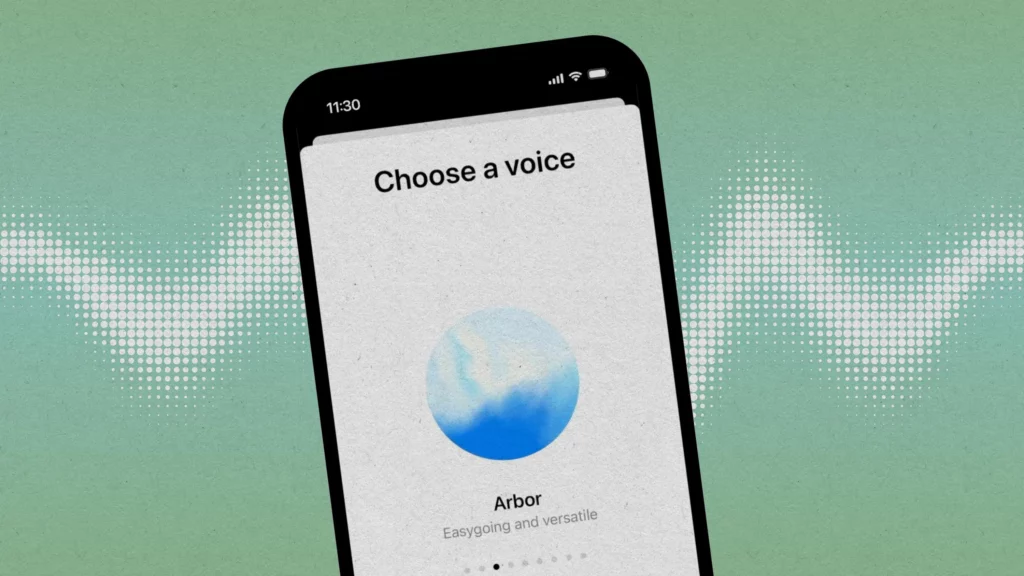

AVM is also getting a new look as part of the launch. The feature is now represented by a blue animated sphere, replacing the moving black dots OpenAI showed during its technology showcase in May.

Once AVM becomes available to them, users will see a pop-up next to the voice icon in the ChatGPT app.

According to OpenAI, Advanced Voice will reach all Plus and Team users in the ChatGPT app over the course of the week. While users waited, the company says, it improved accent handling, added five new voices, and brought Memory and Custom Instructions to the feature.

The five new voices users can try out are Arbor, Maple, Sol, Spruce, and Vale. They join the existing Breeze, Juniper, Cove, and Ember, bringing ChatGPT to nine voices in total, nearly as many as Google’s Gemini Live offers. The names all appear to be inspired by nature, which may be deliberate, since the whole point of AVM is to make using ChatGPT feel more natural.

One voice missing from the lineup is Sky, which OpenAI showcased during its spring update and which drew a legal threat from Scarlett Johansson. The actress, who played an AI system in the feature film “Her,” said Sky’s voice sounded a little too close to her own. OpenAI quickly pulled Sky’s voice, saying it never intended to resemble Johansson’s, even though several staff members had referenced the movie in tweets at the time.


Also absent from this release are the video and screen-sharing capabilities OpenAI showed off in its spring update four months ago. Those features are meant to let GPT-4o process visual and audio information simultaneously. During the demo, an OpenAI staff member showed how users could ask ChatGPT real-time questions about a math problem written on a sheet of paper or about code on their computer screen. OpenAI has not given a timeline for when these multimodal features will launch.

That said, OpenAI says it has made improvements since launching its limited alpha test of AVM. According to the company, ChatGPT’s voice feature now understands accents better, and conversations are smoother and faster. We found glitches were common during our own testing of AVM, but the company says that has improved.

OpenAI is also extending some of ChatGPT’s customization features to AVM: Custom Instructions, which let users personalize how ChatGPT responds to them, and Memory, which lets ChatGPT remember conversations for later reference.

An OpenAI spokesperson says AVM is not yet available in several regions, including the EU, the UK, Switzerland, Iceland, Norway, and Liechtenstein.
