Is it possible for artificial intelligence (AI) to become hungry, or to develop a taste for certain foods? Not yet, but a team of Penn State researchers is developing a novel electronic tongue that mimics how taste influences what humans eat based on both needs and desires, possibly offering a blueprint for AI that processes information more like a human being.

Human behavior is complex, a murky compromise between our physiological needs and psychological desires. While artificial intelligence has advanced significantly in recent years, AI systems generally do not account for the psychological side of human intelligence. Emotional intelligence, for example, is rarely treated as part of AI.


“The main focus of our work was how we could bring the emotional part of intelligence to AI,” said Saptarshi Das, an associate professor of engineering science and mechanics at Penn State and the study’s corresponding author.

“Emotion is a broad field and many researchers study psychology; however, for computer engineers, mathematical models and diverse data sets are essential for design purposes. Human behavior is easy to observe but difficult to quantify, making it challenging to imitate and construct emotionally sophisticated robots. There is currently no practical way to accomplish this.”

Das observed that our eating habits are a good example of emotional intelligence and of the interaction between the body’s physiological and psychological states. Gustation, the way our sense of taste helps us decide what to eat based on flavor preferences, strongly influences what we eat. This is distinct from hunger, which is the physiological motivation for eating.


“If you are someone fortunate to have all possible food choices, you will choose the foods you like most,” he stated. “You are not going to choose something that is very bitter, but likely try for something sweeter, correct?”

Anyone who has felt full after a heavy lunch but was still tempted by a slice of chocolate cake at an afternoon office party knows that people can eat something they enjoy even when they are not hungry.

“If you are given food that is sweet, you would eat it in spite of your physiological condition being satisfied, unlike if someone gave you say a hunk of meat,” he remarked. “Your psychological condition still wants to be satisfied, so you will have the urge to eat the sweets even when not hungry.”


Although many questions remain about the neural circuits and molecular mechanisms in the brain that underlie hunger perception and appetite control, Das said that advances in brain imaging have shed more light on how these circuits work in gustation.

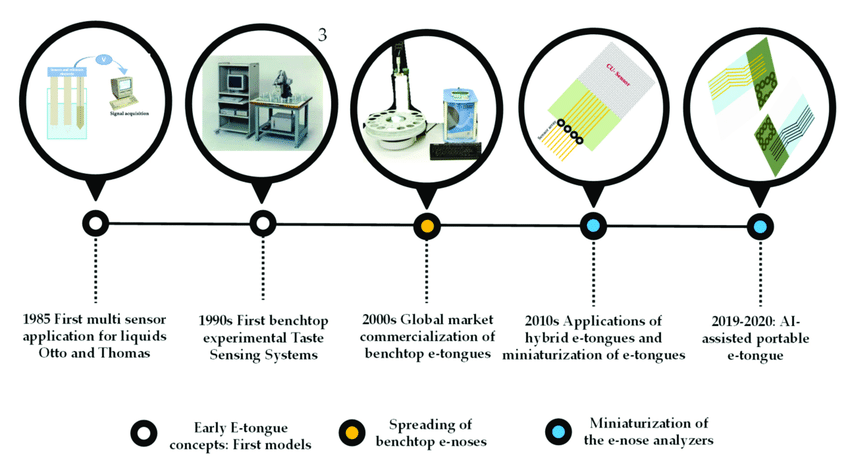

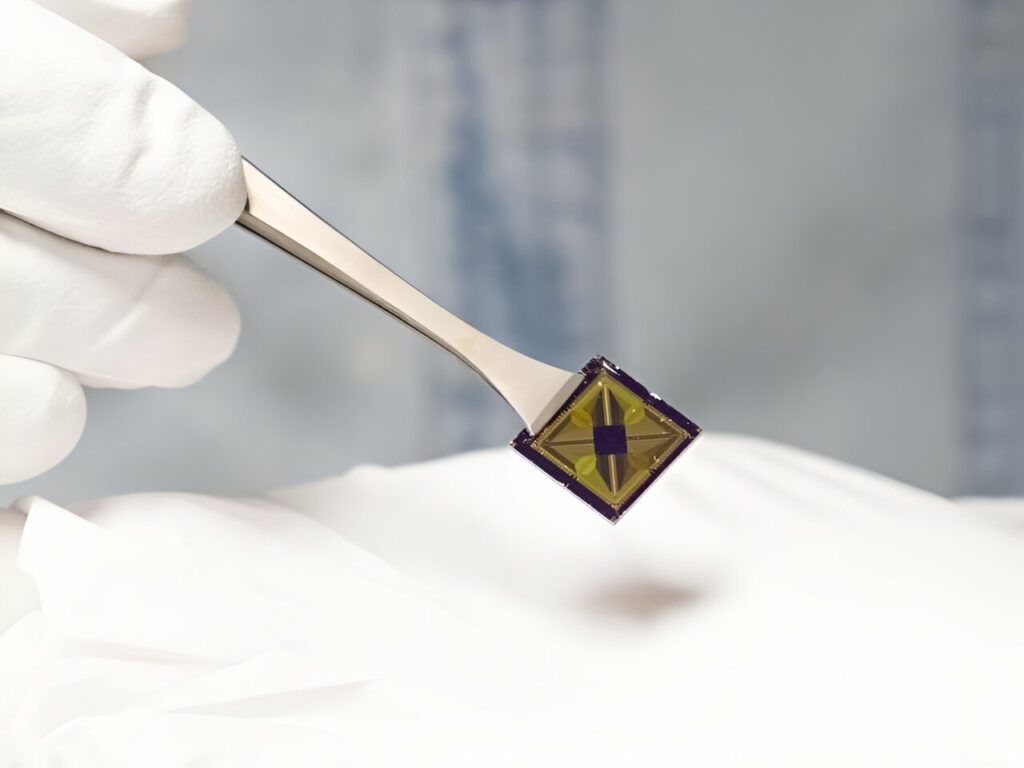

Taste receptors on the human tongue convert chemical information into electrical impulses. These impulses travel through neurons to the brain’s gustatory cortex, where cortical circuits, complex networks of neurons, produce our sense of taste. The researchers built a simplified biomimetic version of this system, consisting of an electronic “tongue” and an electronic “gustatory cortex” made from two-dimensional (2D) materials that are one to a few atoms thick.

The artificial taste buds are made of chemitransistors, tiny graphene-based electronic sensors that can detect gas or chemical molecules. The other part of the circuit uses memtransistors, molybdenum disulfide transistors that remember past signals. By connecting a physiology-driven “hunger neuron,” a psychology-driven “appetite neuron,” and a “feeding circuit,” the researchers created an “electronic gustatory cortex.”
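To make the division of labor between the two “neurons” more concrete, here is a toy Python sketch of how a physiological hunger signal and a psychological appetite signal might be combined into a single eat-or-don’t-eat decision. It is not the researchers’ circuit: the thresholds, the single 0-to-1 “sweetness” input, and the simple OR rule are illustrative assumptions, chosen only to reproduce the behavior Das describes, where a sweet treat gets eaten even on a full stomach while a hunk of meat does not.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; not values from the Penn State study.
HUNGER_THRESHOLD = 0.5
APPETITE_THRESHOLD = 0.7


@dataclass
class Food:
    name: str
    sweetness: float  # assumed taste signal: 0.0 (not sweet) to 1.0 (very sweet)


def hunger_neuron(energy_level: float) -> bool:
    """Physiology-driven: fires when the normalized energy level (0..1) is low."""
    return (1.0 - energy_level) > HUNGER_THRESHOLD


def appetite_neuron(food: Food) -> bool:
    """Psychology-driven: fires when the taste signal is appealing enough."""
    return food.sweetness > APPETITE_THRESHOLD


def feeding_circuit(energy_level: float, food: Food) -> bool:
    """Toy decision rule (an assumption): eat if either hungry or tempted."""
    return hunger_neuron(energy_level) or appetite_neuron(food)


if __name__ == "__main__":
    cake = Food("chocolate cake", sweetness=0.95)
    meat = Food("hunk of meat", sweetness=0.1)
    print(feeding_circuit(0.9, cake))  # full, but tempted by something sweet
    print(feeding_circuit(0.9, meat))  # full and not tempted
    print(feeding_circuit(0.2, meat))  # hungry, so eats regardless of taste
```

In this sketch a full stomach (energy level 0.9) still leads to eating the cake because the appetite neuron fires on its own, mirroring the chocolate-cake example above; the real device implements its logic in memtransistor hardware rather than software.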

Subir Ghosh, a PhD student in engineering science and mechanics, and Andrew Pannone, a graduate research assistant in engineering science and mechanics, work in a lab in the Millennium Science Complex on Penn State’s University Park campus. Credit: Das Research Lab / Penn State

For example, when detecting salt (sodium chloride), the device senses sodium ions, according to Subir Ghosh, a doctoral student in engineering science and mechanics and co-author of the study.

“This means the device can ‘taste’ salt,” explained Ghosh.

In building the artificial gustatory system, the researchers relied on the complementary properties of the two 2D materials.

“We used two separate materials because, while graphene is an excellent chemical sensor, it is not great for circuitry and logic, which is needed to mimic the brain circuit,” said Andrew Pannone, co-author of the paper and a graduate research assistant in engineering science and mechanics. “As a result, we employed molybdenum disulfide, which is also a semiconductor. By combining them, we used the strengths of these nanomaterials to develop a circuit that replicates the gustatory system.”

The approach is adaptable to all five basic tastes: sweet, salty, sour, bitter, and umami. According to Das, such a robotic gustatory system has a wide range of potential applications, from AI-curated diets based on emotional intelligence for weight loss to personalized meal recommendations at restaurants. The team’s next goal is to expand the range of tastes the electronic tongue can detect.

“We are trying to make arrays of graphene devices to mimic the 10,000 or so taste receptors we have on our tongue that are each slightly different compared to the others, which enables us to distinguish between subtle differences in tastes,” Das added. “I think of people who train their tongues to become wine tasters. Perhaps in the future, we’ll be able to train an AI system to be a better wine taster.”

A further step would be to create an integrated gustatory chip.

“We want to fabricate both the tongue part and the gustatory circuit in one chip to simplify it further,” he stated. “That will be our primary focus for the near future in our research.”

Beyond that, the researchers said they envision extending gustatory emotional intelligence in AI systems to other senses, such as visual, auditory, tactile, and olfactory emotional intelligence, to aid the development of future advanced AI.

“The circuits we have demonstrated were very simple, and we would like to increase the capacity of this system to explore other tastes,” Pannone said. “However, we also want to introduce other senses, which would require different modalities, as well as possibly different materials and/or devices. These rudimentary circuits could be refined to more accurately mimic human behavior. Also, as we learn more about how our own brain functions, we will be able to improve this technology.”
