Microsoft launched a more secure version of its AI-powered Bing for businesses on Tuesday, assuring professionals that they can safely share potentially sensitive information with a chatbot.

According to the company, users' conversation data in Bing Chat Enterprise will not be saved, sent to Microsoft's servers, or used to train AI models.

“What this [update] means is that your data doesn’t leak outside the organization,” Yusuf Mehdi, Microsoft’s vice president and consumer chief marketing officer, told CNN. “We never combine your data with web data, and we never save it without your permission. So no data is saved on the servers, and none of your data chats are used to train the AI models.”


Since ChatGPT’s launch late last year, a new crop of powerful AI tools has promised to boost worker productivity. In recent months, however, some companies, such as JPMorgan Chase, have barred their employees from using ChatGPT, citing security and privacy concerns. Other large corporations have reportedly taken similar steps over concerns about employees sharing sensitive information with AI chatbots.

After OpenAI disclosed a bug that allowed some users to see the titles of other users’ conversation histories, Italian officials temporarily banned ChatGPT in the country in April. The same bug, which has since been fixed, also allowed “some users to see another active user’s first and last name, email address, payment address, the last four digits (only) of a credit card number, and credit card expiration date,” according to a blog post OpenAI published at the time.

Microsoft, like other tech companies, is racing to build and launch a suite of AI-powered tools for consumers and professionals amid strong investor interest in the new technology. Microsoft also announced on Tuesday that it will expand its AI-powered Bing Chat tool to support visual search. In addition, the company said that Microsoft 365 Copilot, its previously announced AI-powered tool that helps users edit, summarize, create, and compare documents across its products, will cost $30 per user per month.

Starting Tuesday, Bing Chat Enterprise will be available free of charge to Microsoft’s 160 million Microsoft 365 subscribers, provided a company’s IT department manually turns it on. After 30 days, however, Microsoft will automatically enable access for all users; subscribing companies can disable the tool if they choose.


Rethinking artificial intelligence chatbots for the workplace

Current AI chatbots, such as the consumer version of Bing Chat, send data from users’ conversations to the company’s servers to train and improve their AI models.

Microsoft’s new enterprise option works like the consumer version of Bing Chat, but it will not remember user conversations, so users will have to start fresh each time. (Bing recently began supporting saved chats in its consumer version.)

With these changes, Microsoft, which uses OpenAI’s technology to power its Bing Chat service, says employees can have “complete confidence” that their data “won’t be leaked outside of the organization.”

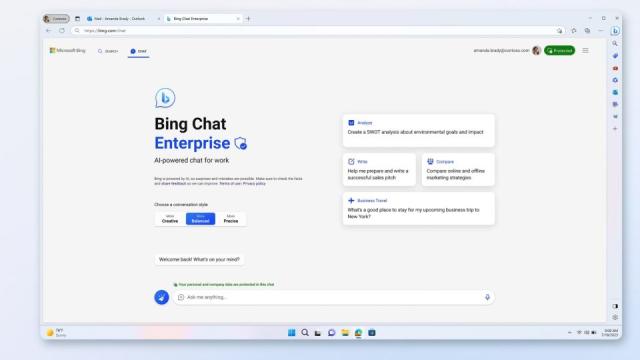

According to Microsoft, to use the tool, a user signs in to Bing with their work credentials, and the system automatically detects the account and places it in a protected mode. The text above the “ask me anything” box reads, “Your personal and company data are protected in this chat.”

In a demo video shown to CNN ahead of the launch, Microsoft demonstrated how a user might enter confidential information into Bing Chat Enterprise, such as financial details for a bid to acquire a building. With the new tool, the user could ask Bing Chat to build a table comparing the property with other nearby buildings and to write an analysis of the strengths and weaknesses of their bid relative to other local bids.

Beyond trying to ease privacy and security concerns about AI in the workplace, Mehdi also addressed the problem of factual errors. He urged users to write clearer, more specific prompts and to double-check the linked citations to reduce the risk of mistakes, or “hallucinations,” as some in the industry call them.
