In what was billed as a step toward mind reading, scientists said on Monday that they had found a way to use brain scans and artificial intelligence modeling to transcribe “the gist” of what people are thinking.

The US scientists said the language decoder is primarily intended to help people who have lost the ability to communicate, but they acknowledged that the technology raised concerns about “mental privacy”.

To allay such concerns, they ran tests showing that their decoder could not be used on anyone who had not allowed it to be trained on their brain activity over a long period inside a functional magnetic resonance imaging (fMRI) scanner.

Previous studies have demonstrated that a brain implant can allow individuals who are unable to talk or type to spell words or even complete phrases.

These so-called “brain-computer interfaces” focus on the part of the brain that controls the mouth when it tries to form words.

The language decoder developed by Alexander Huth’s team “works at a very different level,” said Huth, a co-author of the new study and a neuroscientist at the University of Texas at Austin.


According to the study, published in the journal Nature Neuroscience, it is the first system able to reconstruct continuous language without an invasive brain implant.

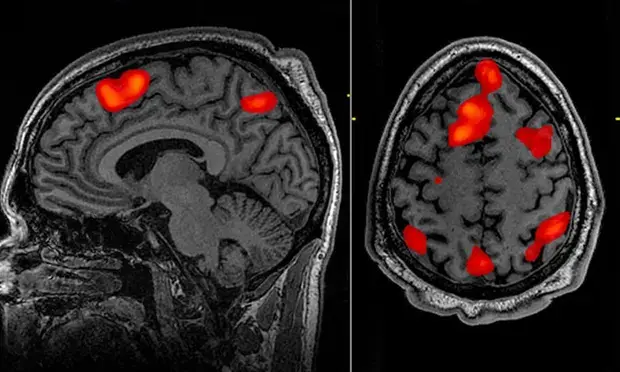

‘Deeper than words’

Three participants in the study listened to a total of 16 hours of spoken narrative stories, mostly podcasts such as the New York Times’ Modern Love, while inside an fMRI machine.

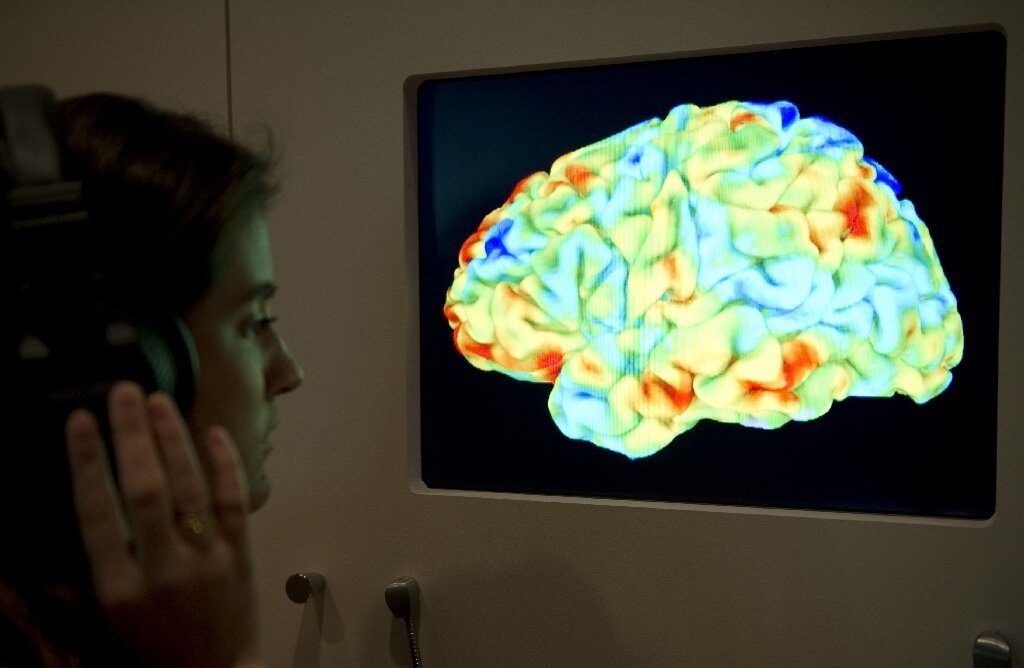

This allowed the researchers to map how words, phrases and meanings prompted responses in the regions of the brain associated with language processing.

They fed this data into a neural network language model built on GPT-1, the predecessor of the AI technology later used in the hugely popular ChatGPT.

The model was trained to predict how each person’s brain would respond to perceived speech, and then to narrow down the candidate word sequences until it found the one that best matched the recorded brain activity.

To test how accurate the model was, each participant then listened to a new story while in the scanner.

The decoder could “recover the gist of what the user was hearing,” according to the study’s first author Jerry Tang.

For instance, when a participant heard the phrase “I don’t have my driver’s license yet,” the model came back with “she has not even started to learn to drive yet.”

The researchers acknowledged that personal pronouns like “I” or “she” were difficult for the decoder to handle.

Even when participants made up their own stories or watched silent movies, however, the decoder was still able to grasp the “gist.”

Huth said this showed that “we are decoding something deeper than language, then converting it into language.”

Because fMRI scanning is too slow to capture individual words, it instead collects a “mishmash, an agglomeration of information over a few seconds,” he explained.

Even though the precise words are lost, he said, it is still possible to see how the idea develops.

David Rodriguez-Arias Vailhen, a bioethics expert at Spain’s Granada University who was not involved in the research, said it went beyond what previous brain-computer interfaces had achieved.

He warned that this brings us closer to a time when computers will be able to “read minds and transcribe thought,” adding that this might happen against people’s will, including while they are sleeping.

The researchers had anticipated such worries.
