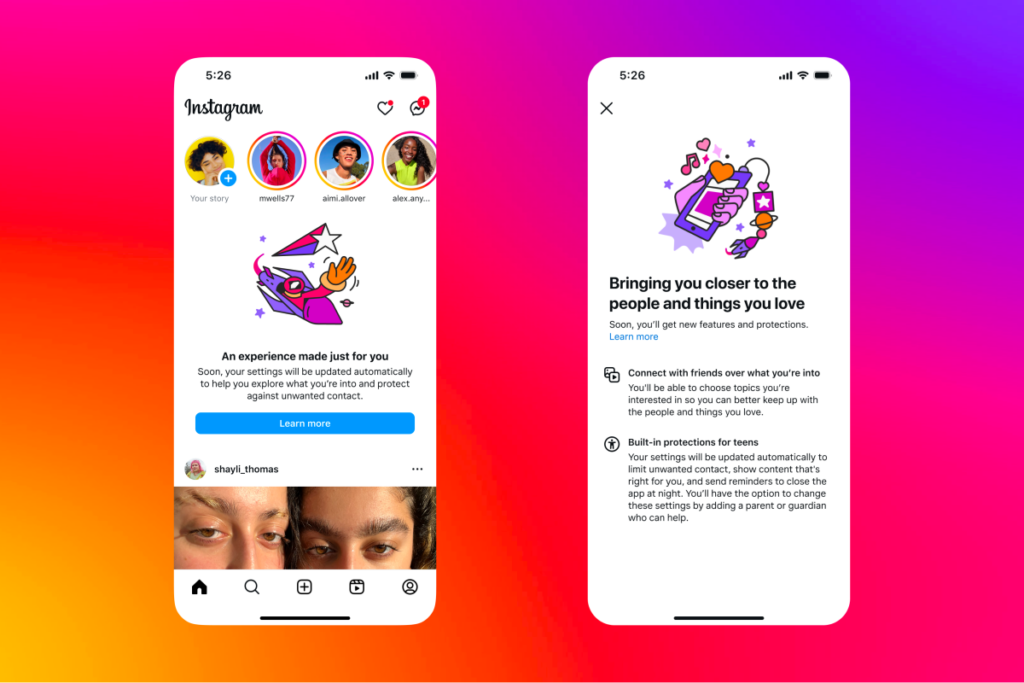

Instagram is finally introducing safeguards to protect children. The social media giant is launching separate accounts for users under the age of 18, with the goal of making the platform safer for young people as concerns about social media’s impact on mental health grow.

Starting Tuesday in the United States, United Kingdom, Canada, and Australia, new users under the age of 18 will be automatically placed in these more restrictive accounts, with existing teen accounts converted over the next 60 days. Similar modifications will be made for teenagers in the European Union later this year.

Meta is also trying to get ahead of teens who attempt to circumvent the new protections. The company acknowledges the risk that teens will lie about their age and says it will require age verification more often. It is also developing technology to detect accounts where minors are posing as adults and automatically move them to the restricted teen accounts. These accounts will be private by default, and teens will be able to send direct messages only to people they already follow. In addition, “sensitive content” will be limited, and teens will receive notifications if they spend more than 60 minutes on the platform. A “sleep mode” option turns off notifications and sends auto-replies between 10 p.m. and 7 a.m.

Furthermore, teens aged 16 and 17 can opt out of some of these restrictions, although users under 16 require parental permission to do so. Naomi Gleit, Meta’s head of product, commented, “The three issues we’re hearing from parents are that their adolescents are seeing information that they don’t want to see, being contacted by people they don’t want to be contacted by, and spending too much time on the app. So teen accounts are very focused on tackling those three issues.”

The move comes as Meta faces lawsuits from numerous states alleging that the company contributed to the teen mental health crisis by developing features that children find addictive. New York Attorney General Letitia James praised Meta’s measures as “an important first step,” but stressed that more must be done to protect minors from social media harm. Meta has previously introduced features such as time-limit notifications, but these have been criticized as not strict enough, since teenagers can easily bypass them without parental supervision.

The new teen accounts also give parents more ways to monitor their children’s Instagram activity. If parental supervision is enabled, parents can limit their teens’ screen time and see who is messaging their child, giving them an opportunity to discuss online safety. Gleit continued: “Parents will be able to see, via the family center, who is messaging their teen and hopefully have a conversation with their teen.”

This is a positive thing regardless of how you slice it.
